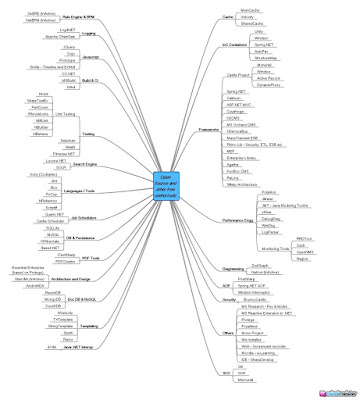

Open source / Free tools and frameworks (for .NET)

It's been a while since my last post. In 2009-10, I was out of the country for 7 months on consulting assignments. I got the opportunity to learn exciting technologies and work to tight deadlines, but it drained me quite a bit too! 2010 has been a roller-coaster so far, with more emphasis on personal commitments, but things are now in a steady state and I am back to blogging.

As you can see, my blog has undergone a few changes: a new template, comment moderation, links to websites, etc. One feature I could not figure out is highlighting the author's comments. I tried the approach given here, but it did not work for me. It would be awesome if you could leave me a tip.

Ok, now let's start off with the post. In my current assignment, I am delighted to work with a couple of seasoned .NET technical architects. One of them (I will link him once I get his profile) shared the above mind-map of open source and free tools and frameworks for .NET applications in general. The mind